Logs¶

Overview¶

AMI writes to 3 log files by default:

- AmiOne.log: human-readable logs of the actions taken by the AMI instance. Errors are logged here.
- AmiOne.amilog: logs of AMI's status over time (memory consumption, trigger run times, etc.), to be read in the Log Viewer layout.
- AmiMessages.log: a record of all messages sent into AMI, usually by AMI Clients.
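As a quick sanity check before opening any of these files, the sketch below reports which of the three default log files are present in a directory. The file names come from the list above; the directory name `log` and the helper `find_default_logs` are hypothetical and only serve the illustration, so substitute the location your AMI instance actually writes to.

```python
from pathlib import Path

# File names taken from the list above; the directory is an assumption.
DEFAULT_LOG_FILES = ("AmiOne.log", "AmiOne.amilog", "AmiMessages.log")


def find_default_logs(log_dir):
    """Map each default log file name to True if it exists in log_dir."""
    base = Path(log_dir)
    return {name: (base / name).is_file() for name in DEFAULT_LOG_FILES}


if __name__ == "__main__":
    # "log" is a hypothetical directory; replace it with your own log location.
    for name, present in find_default_logs("log").items():
        print(f"{name}: {'found' if present else 'missing'}")
```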

Log Viewer Layout¶

Introduction¶

3forge provides a log viewer layout that lets developers quickly understand resource usage across a single session. It can also be extremely helpful when diagnosing performance or memory issues. The layout provides charts covering the following areas: Messages, Memory, Threads, Events, Objects, Web, Web Balancer, Timers, Triggers, and Procedures.

Click here to download the layout or contact support@3forge.com.

How to Use¶

- Download this layout and load it via File -> Import (copy and paste the text) or File -> Upload (file).
- Go to Dashboard -> Data Modeler.
- Edit the existing datasource titled AmiLogFile and set its path to a directory that contains the log files. The path can be absolute or relative (relative to AMI's root directory).
- Click Update Datasource. If the update succeeds, it reports the number of tables (in this case, files) in the directory.
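If the count reported by Update Datasource looks unexpected, it can help to check the directory outside AMI. The following is a minimal sketch, not AMI's own logic: it assumes a relative path is joined to AMI's root directory, as described above, and that only regular files directly inside the directory are counted. The function names and the `ami_root` parameter are hypothetical.

```python
from pathlib import Path


def resolve_log_dir(path, ami_root):
    """Return the directory path; relative paths are joined to ami_root."""
    p = Path(path)
    return p if p.is_absolute() else Path(ami_root) / p


def count_log_files(path, ami_root):
    """Count regular files directly inside the resolved directory."""
    directory = resolve_log_dir(path, ami_root)
    if not directory.is_dir():
        raise NotADirectoryError(f"not a directory: {directory}")
    # Subdirectories are skipped; whether AMI does the same is an assumption.
    return sum(1 for entry in directory.iterdir() if entry.is_file())
```

For example, `count_log_files("log", "/opt/ami")` would inspect `/opt/ami/log`, where both paths are placeholders for your own installation.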

Using AmiOne.amilog file¶

This file is useful for viewing overall diagnostics on AMI.

-

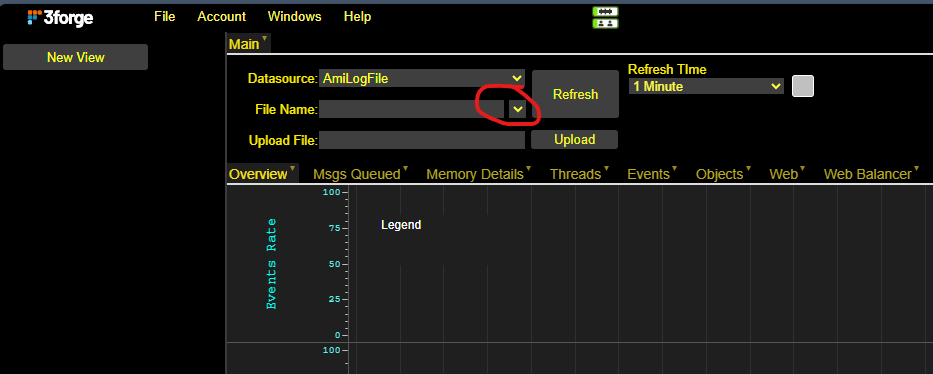

In the top left corner, click on the down arrow as seen in the screenshot below. In the drop down, select the .amilog file that you wish to use.

-

Feel free to switch between tabs to see different information. You can also adjust the size of the plot/legend by dragging the edge out.

Using AmiOne.log file¶

This file is useful for viewing query performance on AMI.

- Go to Windows -> AmiQueriesPerformance.
- Type in the name of a file that ends in .log, e.g. AmiOne.log.
- Click Run Analytics on the right side of the field.
- Go to the Performance Charts tab.
- You can change the legend grouping in the top left panel and filter the chart dots with the bottom left panel. Feel free to sort the table in the bottom panel to suit your needs.
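The two workflows above pick files by extension: .amilog files for the diagnostics charts and .log files for the query performance window. As a rough sketch for seeing which files in a directory fit each case, the hypothetical helper below groups file names by those two extensions. It matches on the extension only and makes no claim about which .log files actually contain query data.

```python
from pathlib import Path


def group_logs_by_extension(log_dir):
    """Return sorted file names grouped under '.amilog' and '.log'."""
    groups = {".amilog": [], ".log": []}
    for entry in Path(log_dir).iterdir():
        # Path.suffix is the final extension, e.g. ".amilog" for AmiOne.amilog.
        if entry.is_file() and entry.suffix in groups:
            groups[entry.suffix].append(entry.name)
    return {ext: sorted(names) for ext, names in groups.items()}
```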